Computer vision enables high-level understanding of visual data. Understanding environments and reasoning about context from images remains a challenging problem for computers. While deep learning-based computer vision techniques have successfully advanced applications such as biometrics, augmented reality, and driver assistance technology, they still fall short of human-level understanding of real-world environments. This gap is one of the major reasons why autonomous machines are not yet fully deployed in real-world environments.

We seek to invent innovative algorithms that let machines see and interpret the world, and to develop systems that enhance the visual capabilities of machines and sensors.

1. Visual perception & understanding - SAIT is developing human-level visual perception algorithms. AI-driven vision technologies promise more accurate and robust visual perception. To raise computer vision from perception to understanding, we are investigating how to extract fundamental geometric information and semantics from images and videos. Understanding semantics and context will enable physical and virtual agents to interact with users naturally.

We are also investigating innovative generative models that can substitute for large real-world datasets by synthesizing photo-realistic images and simulating real-world environments.

2. Visual processing (surpassing the physical limitations of mobile cameras) - SAIT is pursuing AI-driven technology that enables users to capture memories anytime and anywhere. Current mobile image sensors have improved in image quality and resolution, but they still struggle in low-light conditions and at long distances because of the physical limits on sensor size.

We are investigating and reimagining novel algorithms, based not only on deep learning but also on the workings of the human visual cortex, to give sensors greater sensing capabilities.
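Averaging a burst of aligned frames is one classical way to work around small-sensor noise in low light: for independent, zero-mean noise, averaging N frames reduces the noise standard deviation by a factor of √N. The sketch below is only a baseline for intuition, not SAIT's method. It assumes a static scene, perfectly aligned frames, and additive Gaussian noise; `capture_burst` and `merge_burst` are hypothetical helper names.

```python
import random
import statistics

def capture_burst(scene, noise_std, num_frames, rng):
    """Simulate num_frames noisy captures of a static scene.

    Assumption: additive, independent Gaussian noise on every pixel.
    """
    return [[p + rng.gauss(0.0, noise_std) for p in scene] for _ in range(num_frames)]

def merge_burst(frames):
    """Average already-aligned frames pixel by pixel."""
    return [statistics.fmean(pixels) for pixels in zip(*frames)]

if __name__ == "__main__":
    rng = random.Random(42)
    scene = [50.0] * 10_000  # a flat, dim gray patch (hypothetical)
    for n in (1, 4, 16):
        merged = merge_burst(capture_burst(scene, noise_std=10.0, num_frames=n, rng=rng))
        # On a flat scene, the spread of merged pixel values is the residual noise.
        print(n, round(statistics.pstdev(merged), 2))
```

With a noise level of 10 per frame, the residual noise should land near 10, 5, and 2.5 for 1, 4, and 16 frames. Learned methods aim to do better than this baseline, for example by handling motion and by not treating every pixel identically.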

The field of natural language processing underpins many of today’s useful commercial applications, such as text classification, speech recognition, language modeling, machine translation, and question answering with voice assistants. Human speech can be transcribed into text so that its meaning can be analyzed. In doing so, we can improve the applications consumers use most, such as language translation and voice assistants.

There are many challenging problems in advancing speech and language algorithms. One is accurately understanding human dialog at dramatically lower computation and communication cost than server-client systems. Real-time, simultaneous processing algorithms are necessary for natural interaction between humans and machines. In addition, incorporating external knowledge into speech and language algorithms is important for reflecting cultural trends and language evolution.

1. End-to-End Models for Automatic Speech Recognition (ASR) - ASR models automatically decode human voice signals and provide a fundamental basis for natural interaction between humans and machines. ASR makes translation, summarization, and conversation with intelligent agents more interactive and natural.

SAIT's ASR technology has been incorporated into products like Samsung Galaxy S smartphones and TVs.

Figure: Command Assistant, Intention-aware Agent, Self-Learning Agent, ASR Speech

- 2.

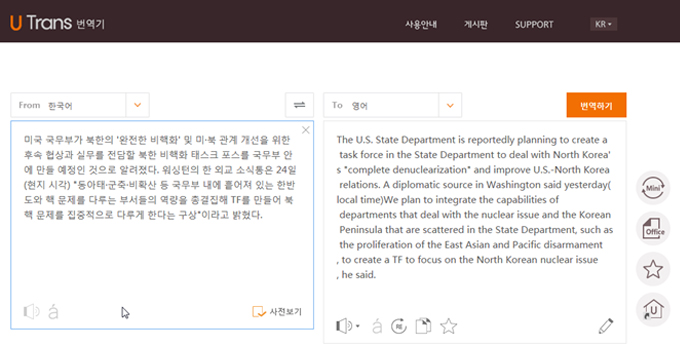

Machine Translation (MT) - MT is fully automated translation of text from one natural language to another by a computer without human assistance. With globalization and increased demands in multilingual content delivery, machine translation is a growing requirement, if not absolute need. MT is considered a key enabling technology in the area of natural language processing.

3. Intelligent dialog system - With the revival of AI technologies, intelligent dialog systems have become one of the most active research and development topics in both academia and industry. Intelligent dialog systems take forms such as:
   - a language-based smart interface for machine execution;
   - an intelligent assistant for managing the explosive amount of information generated in our lives; and
   - an engaging system for interaction.

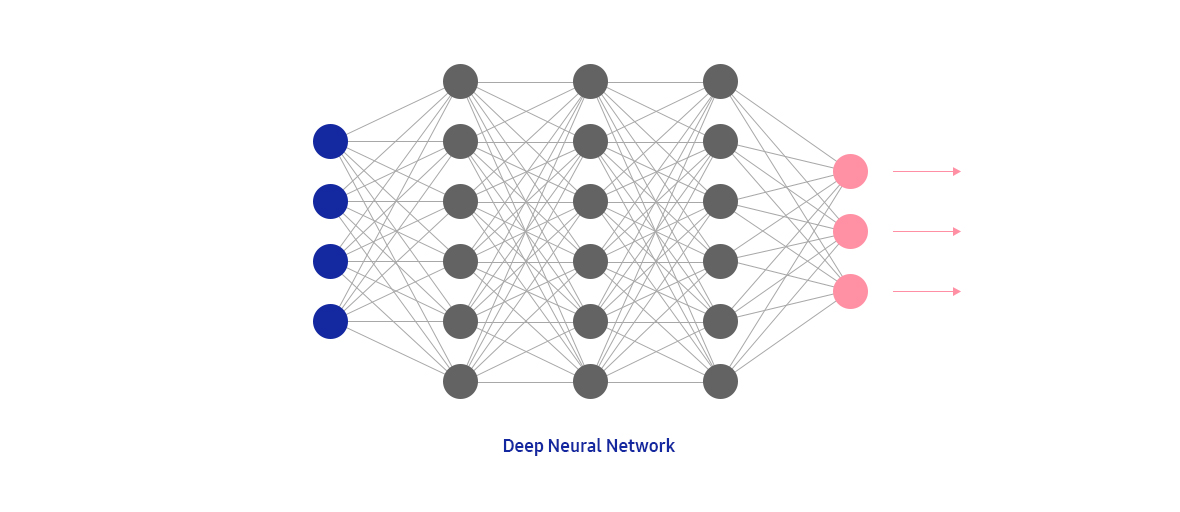
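The end-to-end ASR models mentioned above must turn per-frame acoustic scores into text. The source does not say which end-to-end formulation SAIT uses; Connectionist Temporal Classification (CTC) is one widely used option, and the sketch below shows its simplest decoding rule, greedy best-path decoding, under that assumption. The per-frame probabilities are made up to stand in for an acoustic model's output, and `BLANK`, `best_path`, and `collapse_ctc_path` are hypothetical names.

```python
BLANK = "_"  # hypothetical blank symbol; real systems reserve a dedicated output index

def best_path(frame_probs, alphabet):
    """Pick the most probable symbol for each acoustic frame."""
    return [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]

def collapse_ctc_path(path, blank=BLANK):
    """Apply the CTC rule in one pass: merge consecutive repeats and drop blanks.

    A blank between two identical symbols keeps them separate ("a_a" -> "aa").
    """
    out = []
    prev = None
    for sym in path:
        if sym != prev and sym != blank:
            out.append(sym)
        prev = sym
    return "".join(out)

def ctc_greedy_decode(frame_probs, alphabet, blank=BLANK):
    """Greedy (best-path) CTC decoding."""
    return collapse_ctc_path(best_path(frame_probs, alphabet), blank)

if __name__ == "__main__":
    alphabet = [BLANK, "h", "i"]
    # Made-up per-frame probabilities over [blank, "h", "i"].
    frame_probs = [
        [0.1, 0.8, 0.1],
        [0.2, 0.7, 0.1],
        [0.9, 0.05, 0.05],
        [0.1, 0.1, 0.8],
        [0.3, 0.1, 0.6],
    ]
    print(ctc_greedy_decode(frame_probs, alphabet))  # prints: hi
```

Production decoders typically use beam search, often combined with a language model, instead of this greedy rule.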

Deep learning powers many of today's advances in machine learning. Deep learning is built on artificial neural networks, which are inspired by the connections between neurons in the brain. Unlike the human brain, however, the algorithms behind deep neural networks require many layers, connections, and propagation of data through them. Recent advances show significant improvements, approaching or even exceeding human-level performance, in tasks such as object detection, image classification, and speech recognition. Furthermore, we expect deep learning to provide greater contextual understanding and intelligent reasoning. Deep learning will continue to enable practical applications and provide highly capable assistance in autonomous vehicles, healthcare, intelligent agents, and robots.
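The "layers" and "connections" of a deep neural network are, at their core, stacked weighted sums followed by nonlinearities, with data propagated from one layer to the next. The minimal sketch below shows a forward pass through a tiny two-layer network in plain Python. The weights are hand-picked and untrained, and the choice of ReLU between layers and softmax at the output is an assumption (common, but not the only option).

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: each output neuron is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def relu(values):
    """Element-wise ReLU nonlinearity (one common choice, assumed here)."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities; subtracting the max keeps exp() stable."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, layers):
    """Propagate x through a list of (weights, biases) layers.

    ReLU is applied between layers and softmax at the output.
    """
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return softmax(x)

if __name__ == "__main__":
    # Hypothetical, untrained weights: 2 inputs -> 3 hidden units -> 2 output classes.
    layers = [
        ([[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8]], [0.0, 0.1, 0.0]),
        ([[1.0, -1.0, 0.5], [-0.5, 0.5, 1.0]], [0.0, 0.0]),
    ]
    print(forward([1.0, 2.0], layers))
```

Training would adjust these weights from labeled data, typically by backpropagation; deep models simply stack many more such layers.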

Deep learning algorithms often require large labeled datasets for training. Preparing and organizing such huge datasets is costly, which motivates researchers to find more cost-efficient ways to use weakly organized or unstructured data. One approach uses generative models that learn the data distribution from smaller datasets and then generate new data. Richard Feynman famously said, “What I cannot create, I do not understand.” In the same spirit, generative deep learning algorithms will play a key role in enabling machines to generate diverse datasets that can advance dialogue engines, question-and-answer systems, exploration in reinforcement learning, and more. Today, the field of generative models is evolving rapidly, with established applications such as image denoising, super-resolution, and style transfer.
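The core idea of a generative model, learning a data distribution from samples and then drawing new samples from it, can be illustrated without any deep learning. The sketch below fits an independent normal distribution to each feature of a small dataset and samples synthetic rows from it. This diagonal Gaussian is a deliberately simple stand-in, chosen here as an assumption: it ignores correlations between features, whereas deep generative models (GANs, VAEs, diffusion models) can capture far richer structure. The dataset values are toy numbers, and the function names are hypothetical.

```python
from statistics import NormalDist

def fit_diagonal_gaussian(dataset):
    """Learn one normal distribution per feature (column) from a small dataset.

    Assumption: features are independent, so correlations are ignored.
    """
    columns = list(zip(*dataset))
    return [NormalDist.from_samples(col) for col in columns]

def generate(model, n, seed=None):
    """Draw n new synthetic rows by sampling each feature's distribution."""
    cols = [
        dist.samples(n, seed=None if seed is None else seed + i)
        for i, dist in enumerate(model)
    ]
    return [list(row) for row in zip(*cols)]

if __name__ == "__main__":
    toy_data = [[1.0, 10.0], [2.0, 12.0], [3.0, 14.0], [4.0, 16.0]]  # toy values
    model = fit_diagonal_gaussian(toy_data)
    for row in generate(model, 3, seed=7):
        print([round(v, 2) for v in row])
```

Note that the toy columns are perfectly correlated, yet the sampled rows will not be; that limitation is exactly what more expressive generative models address.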

At SAIT, we are actively researching techniques that accelerate on-device deep learning while improving energy efficiency and increasing throughput, without sacrificing application accuracy or increasing hardware costs. Popular techniques to reduce the number of weights and operations include pruning, which increases weight sparsity, and quantization, which produces compact deep learning models by reducing data bit-width. In addition, specialized deep learning hardware accelerators have been designed to translate the sparsity from pruning into improved energy efficiency by performing only non-zero multiply-accumulate (MAC) operations and by moving and storing data in compressed form. SAIT strives to be at the forefront of on-device intelligence.
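The pipeline described above (prune, quantize, store in compressed form, and compute only non-zero MACs) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not SAIT's implementation: it uses magnitude pruning and symmetric per-tensor int8 quantization (two common variants), a list of `(index, value)` pairs as a stand-in for a hardware sparse format, and hypothetical function names. Activations are kept in floating point for simplicity.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def compress(q):
    """Keep only non-zero values with their indices (a simple compressed sparse format)."""
    return [(i, v) for i, v in enumerate(q) if v != 0]

def sparse_dot(compressed, scale, activations):
    """Multiply-accumulate over non-zero weights only, then rescale to a real value."""
    acc = 0.0
    for i, v in compressed:
        acc += v * activations[i]  # one MAC per stored (non-zero) weight
    return acc * scale

if __name__ == "__main__":
    w = [0.9, -0.05, 0.02, -0.6, 0.3, 0.01]  # hypothetical weights
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]       # hypothetical activations
    pruned = magnitude_prune(w, sparsity=0.5)
    q, scale = quantize_int8(pruned)
    c = compress(q)
    dense_result = sum(wi * xi for wi, xi in zip(w, x))
    print(f"MACs: {len(w)} dense vs {len(c)} sparse")
    print(f"dense: {dense_result:.4f}  pruned+int8: {sparse_dot(c, scale, x):.4f}")
```

Halving the MAC count here costs some accuracy, because the pruned weights and rounding both introduce error; in practice, models are usually fine-tuned after pruning and quantization to recover accuracy.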

Going from materials conception to actual implementation can take anywhere from 20 to 30 years. As we approach the physical limits of silicon, we are searching for more advanced materials that can positively impact the future of semiconductors, batteries, displays, and many other applications that require novel materials. With artificial intelligence (AI), we hope to drastically shorten the timeline for materials discovery, development, and deployment.

We have seen many changes in materials research tools that integrate robotics. These advances allow researchers to rapidly synthesize and characterize large numbers of samples, enabling high-throughput discovery. Machine learning models can turn materials property data into actionable insights and assist with experimental design. The ability to predict molecular properties from structures by simulation, rather than by physical experiment, will save time and resources.

Rapid advances in artificial intelligence (AI) technology will significantly change, and potentially disrupt, how we conduct research and development (R&D). Within a fixed amount of time, human engineers can explore only a limited number of steps in a low-dimensional space. However, many interesting R&D problems require exploring nearly all possibilities in high-dimensional spaces. AI can augment human productivity by finding optimal solutions in far fewer trials, using more efficient exploration strategies learned from large datasets.
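A short worked calculation shows why exhaustive exploration of a high-dimensional space quickly becomes impractical. Assume, purely for illustration, that each of $d$ design parameters can take $k$ settings, and that each experiment takes one hour. An exhaustive grid then requires $N = k^d$ experiments:

$$
N = k^{d}, \qquad k = 10,\ d = 6 \;\Rightarrow\; N = 10^{6}\ \text{experiments}
$$

$$
\frac{10^{6}\ \text{h}}{24 \times 365\ \text{h/year}} = \frac{10^{6}}{8760}\ \text{years} \approx 114\ \text{years}
$$

Adding one more parameter multiplies the cost by another factor of $k$, which is why methods that concentrate trials on promising regions matter far more than running individual experiments faster.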

SAIT is keenly interested in understanding and leveraging AI-powered automation to enhance productivity in R&D. These improvements will enable advances in multidisciplinary research and, we hope, improve outcomes by orders of magnitude.