With the advances in AI technology, the development of AI hardware has become increasingly crucial. SAIT is dedicated to creating diverse and innovative technologies to meet this demand.

We focus on cutting-edge R&D to drive the future of AI computing platform technology.

We are also dedicated to introducing new computing technologies, such as brain-inspired computing and quantum computing, that are expected to affect every aspect of our lives.

System semiconductors are the key technology in future-leading fields such as AI and HPC.

While the industry increasingly needs high-performance computing devices, current semiconductor technology faces a major challenge: the scaling described by Moore’s Law is approaching its end.

We intend to overcome this challenge by developing innovative semiconductors at the system level. Such a system semiconductor requires the convergence of various technologies that enable specialized architectures. Therefore, we seek technologies to design creative processors and build large-scale chiplets.

Using our creative processor solution, we are developing our own CPU architecture, optimized for the power, performance, and area (PPA) targets of given applications.

Eventually, we expect these solutions to have synergies that can transcend Moore’s Law.

A supercomputer performs at or near the highest operational rate for computers. Supercomputers have been used to achieve scientific and engineering breakthroughs. By handling extremely large databases and performing a large number of computations, they continue to push operational speed limits.

At any given time, a few well-publicized supercomputers operate at extremely high speeds relative to all other computers. The race to build the fastest and most powerful supercomputer shows no sign of slowing down. With scientists and engineers using supercomputers for important tasks like studying diseases and simulating new materials, we hope that these advances will benefit all of humanity.

Samsung has its own supercomputing center, which plays an important role in computational science and supports a wide range of tasks. The Samsung Supercomputer has been used to compute novel structures and properties of chemical compounds, polymers, and crystals. It has also served as a key element in accelerating Samsung’s artificial intelligence research in machine learning, deep learning, and data analytics.

The main research topics include new machine learning software, parallel computing, simulation workflows, automation and optimized deployment, novel material discovery and simulation, and the management of real-time computing grids.

Recent deep learning models require enormous memory bandwidth to read and store large volumes of weights and activations. However, this data movement is very expensive in terms of system energy and latency.

Traditional von Neumann architectures consist of processor chips specialized for serial processing and DRAMs optimized for high-density memory. The interface between the two devices is a major bottleneck that results in high power consumption. In addition, this limitation introduces latency and bandwidth constraints. Processing in memory is actively being researched as one way to solve these issues.

A processing-in-memory architecture integrates memory and processing units. Enabling computation closer to the data eases the performance constraint described above. Near/in-memory computing can dramatically reduce both the latency and energy of data transmission between memory and processing units.
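The data-movement argument can be made concrete with a small roofline-style estimate. The sketch below is illustrative only: the function names, the 16-bit value size, and the hardware figures in the usage lines are assumptions chosen for the example, not measurements of any Samsung device. It counts the arithmetic operations and the off-chip bytes for a matrix-vector product, a common operation in deep learning inference, and shows that the ratio is roughly one operation per byte, so memory traffic rather than compute sets the lower bound on run time.

```python
from dataclasses import dataclass


@dataclass
class LayerTraffic:
    flops: int        # arithmetic operations performed
    bytes_moved: int  # bytes transferred between memory and processor

    @property
    def arithmetic_intensity(self) -> float:
        """Operations performed per byte moved."""
        return self.flops / self.bytes_moved


def matvec_traffic(rows: int, cols: int, bytes_per_value: int = 2) -> LayerTraffic:
    """Traffic for y = W @ x, assuming every weight is fetched from memory once."""
    flops = 2 * rows * cols                              # one multiply and one add per weight
    weight_bytes = rows * cols * bytes_per_value         # read W
    activation_bytes = (rows + cols) * bytes_per_value   # read x, write y
    return LayerTraffic(flops, weight_bytes + activation_bytes)


def min_time_seconds(t: LayerTraffic, peak_flops: float, bandwidth_bytes: float) -> float:
    """Lower bound on run time: the slower of compute and memory traffic."""
    return max(t.flops / peak_flops, t.bytes_moved / bandwidth_bytes)


# Hypothetical hardware figures, chosen only to illustrate the calculation.
layer = matvec_traffic(4096, 4096)
print(f"arithmetic intensity: {layer.arithmetic_intensity:.3f} FLOPs/byte")
print(f"lower bound: {min_time_seconds(layer, peak_flops=1e12, bandwidth_bytes=1e11):.2e} s")
```

With these assumed figures the memory term dominates the compute term by about an order of magnitude, which is the situation that moving computation closer to the data is meant to relieve.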

SAIT is actively collaborating with Samsung business units to explore future memory architectures.

As AI applications become more integrated into daily life, deep learning workloads are being deployed on a range of devices, from mobile devices to servers. Moreover, with increasingly varied structural and operational features in neural networks, a diverse range of AI accelerators and systems is being researched. As systems become more varied in scale and heterogeneity, ensuring optimal system performance becomes more challenging.

To solve this problem, it is essential to research the system software, including programming models, compilers, and runtimes. SAIT is currently focusing on researching a software stack that can support extreme scales, ranging from edge devices to data centers, and that can handle the heterogeneity in which AI accelerators and in-memory processing units coexist. Additionally, we aim to unify software optimization techniques like parallelization and scheduling by generalizing the optimization problem for various target hardware and systems. Furthermore, recent research at SAIT involves exploring deep-learning-based compiler optimization to address the increasing search space of optimization problems as AI systems continue to grow in scale and complexity.
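As a toy illustration of scheduling across heterogeneous hardware, the sketch below assigns operations to whichever device would finish them earliest. It is a generic greedy heuristic, not SAIT's software stack: the device names (`npu`, `pim`), the operation names, and the cost figures are all hypothetical, and the operations are assumed to be independent of one another, which real neural-network graphs are not.

```python
def greedy_schedule(op_costs: dict[str, dict[str, float]]) -> tuple[dict[str, str], float]:
    """Assign independent ops to devices using an earliest-finish-time heuristic.

    op_costs maps each op name to its estimated run time on each device.
    Returns the op-to-device assignment and the resulting makespan.
    """
    devices = sorted({dev for costs in op_costs.values() for dev in costs})
    busy_until = {dev: 0.0 for dev in devices}
    assignment: dict[str, str] = {}

    # Place the most expensive ops first, ranked by their best-case cost.
    order = sorted(op_costs, key=lambda op: min(op_costs[op].values()), reverse=True)
    for op in order:
        costs = op_costs[op]
        # Pick the device on which this op would complete soonest.
        best = min(costs, key=lambda dev: busy_until[dev] + costs[dev])
        busy_until[best] += costs[best]
        assignment[op] = best

    return assignment, max(busy_until.values())


# Hypothetical per-device run times in milliseconds.
example_costs = {
    "matmul": {"npu": 1.0, "pim": 4.0},
    "embedding_lookup": {"npu": 3.0, "pim": 1.0},
    "softmax": {"npu": 0.5, "pim": 2.0},
}
assignment, makespan = greedy_schedule(example_costs)
print(assignment, makespan)
```

A heuristic like this depends on one hand-written cost table per target. As the number of devices and operations grows, the space of possible assignments grows combinatorially, which is the search-space problem the paragraph above refers to.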

Artificial intelligence has recently made significant advancements, propelled by the explosion of available data and enhanced computing power. However, it still grapples with numerous challenges. In particular, the energy required for computation is massive, and the evolution of computing architecture, which follows Moore’s Law, struggles to keep pace with the rapid growth of AI models and runs into constraints such as the von Neumann bottleneck. Moreover, although AI, especially large language models, surpasses humans in some areas, it still falls short of replicating basic behaviors that even the simplest organisms perform effortlessly.

Neuromorphic computing offers a way to address these challenges. SAIT is conducting research on brain reverse engineering to understand the structure and principles of biological neural systems. This research also extends to the design and development of learning and inference algorithms that reflect these insights, along with sensor and computing devices. The ultimate goal is to create a new, more efficient, and more powerful computing architecture that can handle complex tasks and surpass existing von Neumann structures, presenting a new computing paradigm through architectural innovation.

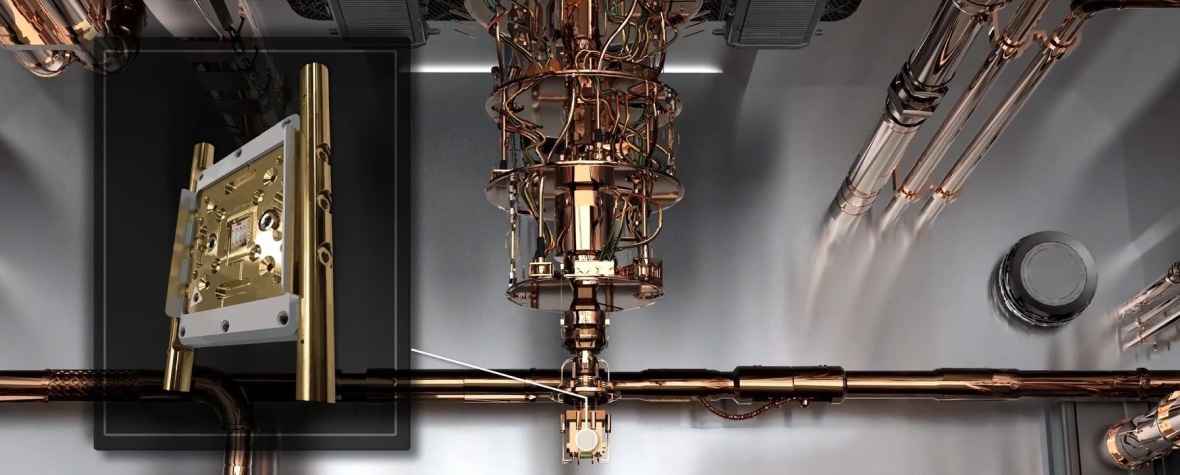

Quantum computing uses the laws of quantum physics to simulate and solve complex problems and is expected to bring revolutionary computing power to our daily lives. To realize this, quantum computers must operate in the fault-tolerant regime, which is known to require millions of qubits and high-fidelity gate operations.

To accomplish this goal, our quantum computing team is developing techniques for fabricating, controlling, and measuring solid-state multi-qubit chips, such as superconducting qubits and silicon spin qubits. We are taking a deep dive into the development of an integrated quantum processing unit consisting of a multi-qubit chip and a cryogenic CMOS qubit controller to build a scalable and reliable quantum computer.

We believe that this direction will make our journey to fault-tolerant quantum computing successful.
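Why fault tolerance calls for both many qubits and high gate fidelity can be illustrated with a classical toy model. The sketch below is not a quantum error-correcting code and is not the scheme used by the team. It assumes a simple repetition code in which one logical bit is stored in `n` copies, each copy flips independently with probability `p`, and the value is recovered by majority vote. The function name and parameters are chosen for this example.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority of n independent copies are flipped.

    p is the per-copy error probability; n must be odd so the vote cannot tie.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer")
    majority = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(majority, n + 1))


# Redundancy helps only when the per-copy error rate is low enough.
for n in (1, 3, 5, 7):
    print(n, logical_error_rate(0.01, n), logical_error_rate(0.6, n))
```

In this toy model, adding copies suppresses the logical error rate when `p` is small and makes it worse when `p` exceeds one half. Real quantum codes must also correct phase errors and have different thresholds and overheads, but the same trade-off between redundancy and per-operation error rate applies.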

Quantum computers, which operate under the principles of quantum mechanics, are expected to perform certain complex calculations far faster than traditional computers. This poses a threat to current cryptographic systems, whose security rests on mathematical problems that are difficult to solve on traditional computers. To address this issue, post-quantum cryptography (PQC) is being extensively researched and is already commercially available. With advances in quantum computing technology, PQC is expected to lead future security technologies.